How Search Engines Work

Imagine the World Wide Web as a network of stops in a big city subway system.

Each stop is a unique document (usually a web page, but sometimes a PDF, JPG, or other file). The search engines need a way to “crawl” the entire city and find all the stops along the way, so they use the best path available—links.

The link structure of the web serves to bind all of the pages together.

Links allow the search engines' automated robots, called "crawlers" or "spiders," to reach the many billions of interconnected documents on the web.
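A minimal sketch of link-following discovery shows why links are the crawler's "path". It assumes the web is modeled as an in-memory dictionary from each URL to the URLs it links to, which is a hypothetical stand-in for downloading and parsing real pages. Real crawlers also fetch over the network, respect robots.txt, and run across many machines. None of that is modeled here.

```python
from collections import deque


def crawl(link_graph: dict[str, list[str]], seed: str, max_pages: int = 1000) -> list[str]:
    """Return pages reachable from `seed`, in breadth-first discovery order."""
    seen = {seed}             # URLs already queued, so none is visited twice
    frontier = deque([seed])  # URLs waiting to be "fetched"
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        # Follow every outgoing link; unknown URLs are treated as having no links.
        for target in link_graph.get(url, []):
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return visited


# Hypothetical five-page site; nothing links to "/orphan".
site = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": [],
    "/orphan": ["/"],
}
print(crawl(site, "/"))  # ['/', '/about', '/blog', '/blog/post-1']
```

The page "/orphan" is never discovered because no other page links to it. That is the practical reason a page needs at least one inbound link before a crawler can find it.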

Once the engines find these pages, they decipher the code from them and store selected pieces in massive databases, to be recalled later when needed for a search query. To accomplish the monumental task of holding billions of pages that can be accessed in a fraction of a second, the search engine companies have constructed datacenters all over the world.

These monstrous storage facilities hold thousands of machines processing large quantities of information very quickly. When a person performs a search at any of the major engines, they demand results instantaneously; even a one- or two-second delay can cause dissatisfaction, so the engines work hard to provide answers as fast as possible.
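One standard data structure for answering queries this quickly is an inverted index. It maps each term to the documents that contain the term, so a query never has to scan every stored page. The sketch below is a textbook toy, not a description of any engine's internals. It assumes a naive lowercase tokenizer and keeps everything in memory.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Simplistic tokenizer: lowercase runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the set of document IDs containing it (an inverted index)."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return dict(index)


def lookup(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the documents that contain every query term (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))  # copy so the index is not mutated
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Hypothetical three-document corpus.
docs = {
    "page-a": "Ohio State University admissions",
    "page-b": "Harvard University library",
    "page-c": "Subway map of the city",
}
index = build_index(docs)
print(sorted(lookup(index, "university")))  # ['page-a', 'page-b']
```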

Search engines are answer machines. When a person performs an online search, the search engine scours its corpus of billions of documents and does two things: first, it returns only those results that are relevant or useful to the searcher's query; second, it ranks those results according to the popularity of the websites serving the information. It is both relevance and popularity that the process of SEO is meant to influence.

How do search engines determine relevance and popularity?

To a search engine, relevance means more than finding a page with the right words. In the early days of the web, search engines didn’t go much further than this simplistic step, and search results were of limited value. Over the years, smart engineers have devised better ways to match results to searchers’ queries. Today, hundreds of factors influence relevance, and we’ll discuss the most important of these in this guide.

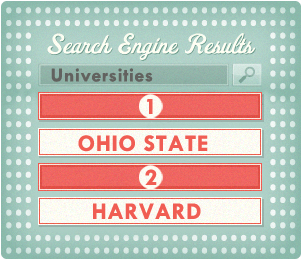

Search engines typically assume that the more popular a site, page, or document, the more valuable the information it contains must be. This assumption has proven fairly successful in terms of user satisfaction with search results.

Popularity and relevance aren’t determined manually. Instead, the engines employ mathematical equations (algorithms) to sort the wheat from the chaff (relevance), and then to rank the wheat in order of quality (popularity).

These algorithms often comprise hundreds of variables. In the search marketing field, we refer to them as “ranking factors.” Moz crafted a resource specifically on this subject: Search Engine Ranking Factors.

You can surmise that search engines believe that Ohio State is the most relevant and popular page for the query “Universities,” while the page for Harvard is less relevant or popular.
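The two-step process described above can be illustrated with a toy example: first filter for relevance, then order the survivors by popularity. Both the all-words-must-match relevance test and the single popularity number per page are simplifying assumptions. Real engines combine hundreds of ranking factors. The URLs and scores are made up.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str
    popularity: float  # hypothetical score, e.g. a count of inbound links


def is_relevant(page: Page, query: str) -> bool:
    # Toy relevance test: every query word must appear in the page text.
    words = set(page.text.lower().split())
    return all(term in words for term in query.lower().split())


def search(pages: list[Page], query: str) -> list[str]:
    # Step 1: keep only relevant pages (separate the wheat from the chaff).
    relevant = [p for p in pages if is_relevant(p, query)]
    # Step 2: order the relevant pages by popularity, highest first.
    relevant.sort(key=lambda p: p.popularity, reverse=True)
    return [p.url for p in relevant]


pages = [
    Page("example.edu/a", "a state university in ohio", popularity=120),
    Page("example.edu/b", "a private university in massachusetts", popularity=95),
    Page("example.com/c", "city subway timetable", popularity=500),
]
print(search(pages, "university"))  # ['example.edu/a', 'example.edu/b']
```

The most popular page, example.com/c, does not appear at all. Popularity only orders pages that have already passed the relevance filter.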

How Do I Get Some Success Rolling In?

Or, "how search marketers succeed"

The complicated algorithms of search engines may seem impenetrable. Indeed, the engines themselves provide little insight into how to achieve better results or garner more traffic. What they do provide us about optimization and best practices is described below:

SEO INFORMATION FROM GOOGLE WEBMASTER GUIDELINES

Google recommends the following to get better rankings in their search engine:

- Make pages primarily for users, not for search engines. Don't deceive your users or present different content to search engines than you display to users, a practice commonly referred to as "cloaking."

- Make a site with a clear hierarchy and text links. Every page should be reachable from at least one static text link.

- Create a useful, information-rich site, and write pages that clearly and accurately describe your content. Make sure that your <title> elements and ALT attributes are descriptive and accurate.

- Use keywords to create descriptive, human-friendly URLs. Provide one version of a URL to reach a document, using 301 redirects or the rel="canonical" attribute to address duplicate content.
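The rel="canonical" attribute helps with duplicate content by letting several URL variants fold into one document. The sketch below shows one way a crawler might do that. It assumes the pages have already been fetched into a dictionary of URL to HTML, and it uses only Python's standard `html.parser`. It ignores relative hrefs and 301 redirects, and real engines may treat the tag as a hint rather than a directive. It is an illustration, not how any particular engine resolves duplicates.

```python
from collections import defaultdict
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = dict(attrs)  # attribute values may be None
        rels = (attr_map.get("rel") or "").lower().split()
        if "canonical" in rels and attr_map.get("href"):
            self.canonical = attr_map["href"]


def canonical_url(fetched_url: str, html: str) -> str:
    """Use the page's declared canonical URL, falling back to the fetched URL."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical or fetched_url


def group_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group fetched URLs under the canonical URL each page declares."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        groups[canonical_url(url, html)].append(url)
    return dict(groups)


# Hypothetical fetched pages: two URL variants of the same shoe page.
pages = {
    "https://example.com/shoes?ref=ad": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/shoes": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/hats": "<title>Hats</title>",
}
print(group_duplicates(pages))
```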

SEO INFORMATION FROM BING WEBMASTER GUIDELINES

Bing engineers at Microsoft recommend the following to get better rankings in their search engine:

- Ensure a clean, keyword-rich URL structure is in place.

- Make sure content is not buried inside rich media (Adobe Flash Player, JavaScript, Ajax) and verify that rich media doesn't hide links from crawlers.

- Create keyword-rich content and match keywords to what users are searching for. Produce fresh content regularly.

- Don’t put the text that you want indexed inside images. For example, if you want your company name or address to be indexed, make sure it is not displayed inside a company logo.

Have No Fear, Fellow Search Marketer!

In addition to this freely given advice, over the 15+ years that web search has existed, search marketers have found methods to extract information about how the search engines rank pages. SEOs and marketers use that data to help their sites and their clients achieve better positioning.

Surprisingly, the engines support many of these efforts, though the public visibility is frequently low. Conferences on search marketing, such as the Search Marketing Expo, Pubcon, Search Engine Strategies, Distilled, and Moz’s own MozCon, attract engineers and representatives from all of the major engines. Search representatives also assist webmasters by occasionally participating online in blogs, forums, and groups.

There is perhaps no greater tool available to webmasters researching the activities of the engines than the freedom to use the search engines themselves to perform experiments, test hypotheses, and form opinions. It is through this iterative—sometimes painstaking—process that a considerable amount of knowledge about the functions of the engines has been gleaned. Some of the experiments we’ve tried go something like this:

- Register a new website with nonsense keywords (e.g., ishkabibbell.com).

- Create multiple pages on that website, all targeting a similarly ludicrous term (e.g., yoogewgally).

- Make the pages as close to identical as possible, then alter one variable at a time, experimenting with placement of text, formatting, use of keywords, link structures, etc.

- Point links at the domain from indexed, well-crawled pages on other domains.

- Record the rankings of the pages in search engines.

- Now make small alterations to the pages and assess their impact on search results to determine what factors might push a result up or down against its peers.

- Record any results that appear to be effective, and re-test them on other domains or with other terms. If several tests consistently return the same results, chances are you’ve discovered a pattern that is used by the search engines.

An Example Test We Performed

In our test, we started with the hypothesis that a link earlier (higher up) on a page carries more weight than a link lower down on the page. We tested this by creating a nonsense domain with a home page with links to three remote pages that all have the same nonsense word appearing exactly once on the page. After the search engines crawled the pages, we found that the page with the earliest link on the home page ranked first.

This process is useful, but it is not the only way search marketers learn how the engines work.

In addition to this kind of testing, search marketers can also glean competitive intelligence about how the search engines work through patent applications made by the major engines to the United States Patent and Trademark Office. Perhaps the most famous among these is the system that gave rise to Google in the Stanford dormitories during the late 1990s, PageRank, documented as Patent #6285999: "Method for node ranking in a linked database." The original paper on the subject, "The Anatomy of a Large-Scale Hypertextual Web Search Engine," has also been the subject of considerable study. But don't worry; you don't have to go back and take remedial calculus in order to practice SEO!
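For the curious, the core idea of PageRank fits in a few lines. The sketch below uses power iteration with a damping factor of 0.85, the value the original paper reports using. Two details are assumptions of this sketch rather than quotes from the patent. First, scores are divided by the page count so they sum to 1. Second, pages with no outgoing links spread their score evenly across all pages. Google's present-day ranking is far more complex than this.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 100) -> dict[str, float]:
    """Power-iteration PageRank over a small link graph.

    Each step computes, for every page p:
        PR(p) = (1 - d) / N + d * sum(PR(q) / L(q) for each q linking to p)
    where L(q) is q's number of outgoing links. Pages with no outgoing links
    ("dangling" pages, an assumption of this sketch) share their score evenly.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        # Baseline from the damping term plus the evenly spread dangling score.
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        # Each page passes its score in equal shares to the pages it links to.
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new_rank[t] += share
        delta = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if delta < tol:
            break
    return rank


# Hypothetical three-page graph: C receives links from both A and B.
ranks = pagerank({"A": ["B", "C"], "B": ["C"], "C": ["A"]})
print(sorted(ranks, key=ranks.get, reverse=True))  # ['C', 'A', 'B']
```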

Through methods like patent analysis, experiments, and live testing, search marketers as a community have come to understand many of the basic operations of search engines and the critical components of creating websites and pages that earn high rankings and significant traffic.

The rest of this guide is devoted to clarifying these insights. Enjoy!
